Improve Feedback with a “Highlighter Rubric”

If you’ve read my blog, or subscribed to my newsletter, you’re probably familiar with my attitude towards analytic rating scales. As I wrote in “Essay Rubrics: Why They are Hell, and how to Design Them Better”:

Essay rubrics are supposed to pull the evaluation of writing into the realm of the objective. A rubric is supposed to be a step toward empiricism. It’s supposed to reduce the complex reality of a student’s cognitive work and expression into a series of discrete, observable realities.

However, in my experience, teachers don’t work inductively when writing rubrics. This is the “sloppy” part of Sloppy Positivism.

I still believe that. But more recently, my reflections on rubrics led me to new questions: how might a teacher work more inductively? And would doing so increase the value of an analytic scoring rubric for the student?

“For-Real” Induction in Scoring Rubrics

So, teachers, tell me — if you’ve used an analytic scoring rubric, be honest: how often do you hover over the different boxes, and then go with some kind of “gut” decision?

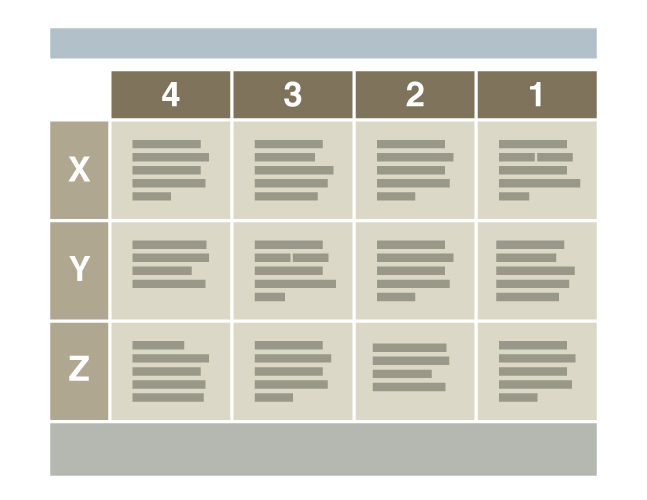

Say you’re grading an essay, using the 12-point rubric above. You’re grading for the category “X” (“organization,” maybe). And you’ve read over the essay, made a few brief comments, and now you have to decide: 1, 2, 3, or 4? Does the language in “4” describe the essay’s organization? Well, maybe. Does the language in “3” describe the essay’s organization? Sort of. Yeah, let’s go with “3.”

If I’m honest, that describes pretty closely my mental operation of using a rubric like this. But guess what? That’s not inductive. That’s deductive. I’m trying to fit my overall impression of the essay’s organization to the generalized descriptions in the boxes on the rubric.

My hunch is, most of us don’t realize we are operating deductively when we suppose we are scoring inductively with an analytic scoring rubric. The usual argument for rubrics is that, since we are avoiding a holistic grade (which is easily influenced by mood, bias, etc.), the resulting grades are more “objective.” But, in practice, this supposedly objective method of scoring ends up being just piecemeal holism.

From the student’s perspective, that means the experience of reviewing a scored rubric is usually frustrating. They might score more strongly in certain areas, but can’t celebrate it; what the student sees is that they’ve been nickel-and-dimed to a B-. The language in the boxes, since it is generalized, does not offer a clear path to improvement. It is not actionable feedback.

So my thought was, could I develop a time-effective way to stay true to the inductive intentions of an analytic scoring rubric (ASR)? And might this give students more useful, usable feedback?

Using Highlighters to Flag Support

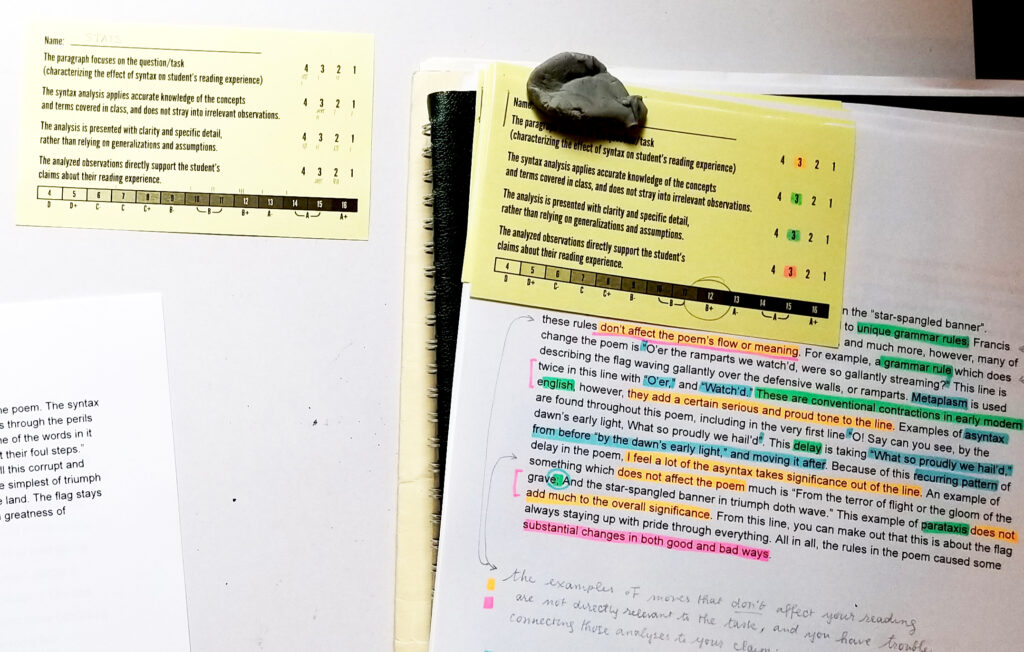

Let’s look at an example. The prompt was something like, “Discuss how Francis Scott Key’s syntax choices affected your reading experience.” I asked the students to write one paragraph.

Here’s what I tried. Rather than developing generalized descriptions for each score/category, I only described the success in each category (what might typically go in the “A” column of an analytic scoring rubric). I avoided categories that were not relevant to the skills I was assessing. (No categories for “organization” or “mechanics,” etc.)

- The paragraph focuses on the question/task (characterizing the effect of syntax on the student’s reading experience)

- The syntax analysis applies accurate knowledge of the concepts and terms covered in class, and does not stray into irrelevant observations

- The analysis is presented with clarity and specific detail, rather than relying on generalizations and assumptions

- The analyzed observations directly support the student’s claims about their reading experience.

With that set up, I pulled out my set of highlighters. I chose a color for each category — orange, green, blue, pink. And I made it my job to find and flag evidence for each category in the student’s paragraph.

I found I needed a strategy for more holistic comments, or for highlights that I felt needed some explanation. So I developed the additional strategy of marking a “square” in the appropriate color, like a kind of footnote, to help the students connect the comment to the appropriate category.

The Result: A More Useful Feedback Instrument

The result was a rubric that was more than just a “justification” of my scores in the different categories. It guided my thinking to be more inductive: I was able to use the highlighting to visually review the evidence influencing my score for each category.

And finally, it was a more useful feedback instrument for my students. The student in the pictured example received a B+. But the highlighting showed them the areas where they could focus their attention for each category. I made the important decision NOT to explain whether each highlight was pointing out something positive or negative. My goal was that a student wishing to revise would have to analyze and reflect on those highlighted areas to decide which ones to revise, and how.

And as a result, in fact, a good number of students initiated follow-up conversations. We were able to form quick-and-dirty revision plans that left them feeling (I think) more agency surrounding how they were being assessed.

Was it Worth the Effort?

Was grading with four highlighters more work? Yes. But hear me out.

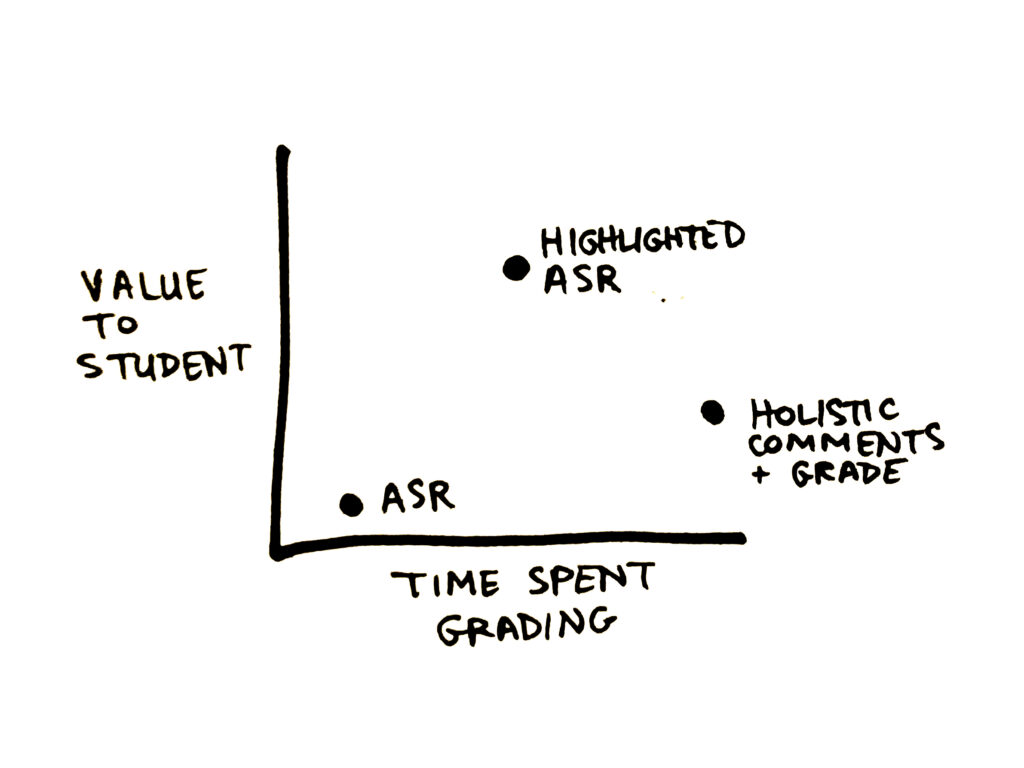

When it comes to grading, I’m always thinking about time spent vs. value. Nothing is more frustrating than spending hours and hours writing carefully worded holistic comments, only to have the student flip to the end, check the grade, and chuck the paper in the trash.

I get the appeal, therefore, of the ASR. Scoring on a classic analytic scoring rubric reduces the teacher’s time investment. But it also reduces the value of the feedback for those students who wish to use it to improve.

I found that grading with this highlighter method was somewhere in between in terms of time investment. But based on the number of productive follow-up conversations, it was much more valuable to the students for the “price” of time spent. To close, here’s an informal, unscientific graph: